Ultimate Tripo AI Guide (I): Prompt tips and tricks for text/image-to-3D

We are pleased to introduce Lyson (Twitter: @lyson_ober), a distinguished 3D creator from the Tripo AI 3D community. Lyson contributed to the comprehensive “Ultimate Tripo AI Guide.” The guide is divided into multiple blog posts, which Tripo AI will share on Medium as a resource for creators.

Regardless of your skill level, we invite and welcome everyone to become a part of our community. Your unique perspective and experiences contribute to the collective growth of Tripo AI.

Explore Tripo AI: https://www.tripo3d.ai/

Join our Discord: https://discord.gg/chrV6rjAfY

Hello everyone, I’m Lyson!

Over the past year, the GenAI (Generative AI) field has continued to grow rapidly. At the beginning of the year, I gave a systematic Midjourney tutorial on Bilibili, and since then the technology for AI-generated 3D models has matured considerably. As the learning curve flattens, you can pick up 3D skills faster, and everyone can experience the joy of 3D creation.

For example, I recently experimented with Tripo AI + Blender + Magnific AI and got the following result 👇 (swipe left to see the full image):

When I first tried Tripo AI, it felt like rediscovering the joy I had when I first played with the Midjourney V3 model. Another storyline intertwined with 3D generation technology is the advancement in motion capture tech. In the past, we needed expensive equipment to capture high-precision motion data, but now, all it takes is a smartphone.

Many of you have been wondering about the time investment required to learn 3D modeling. It’s substantial! If AI can generate models directly, achieving even 80% completion, not to mention 100%, that would be a huge win. It would save a lot of time, especially for those repetitive, ‘bricklaying’ tasks. This is one of the reasons why Tripo AI excites me!

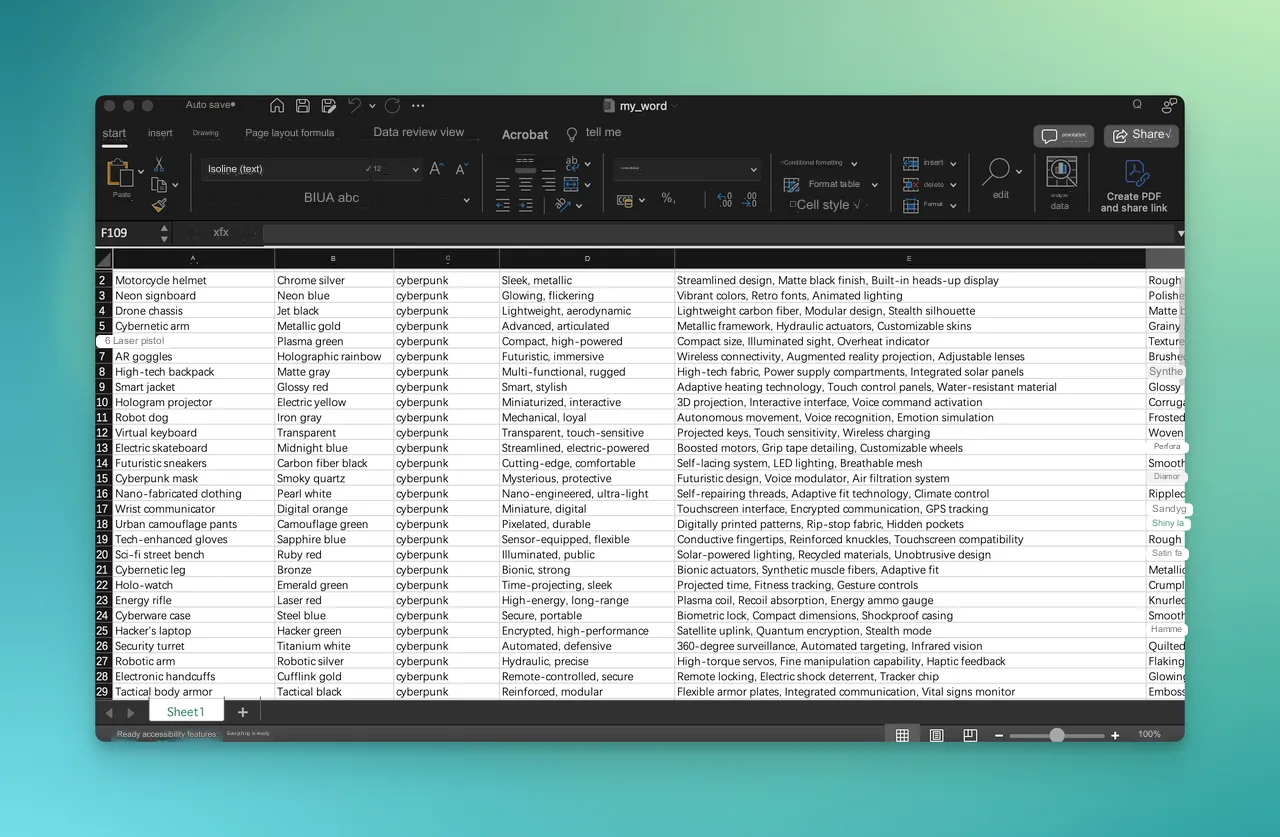

On my first day using it, I wrote Python scripts to batch process hundreds of models and test the limits of Tripo AI’s performance. As we all know, prompts are particularly important in text-based generation, especially while a tool is developing rapidly. So, understanding which prompts work and which need improvement can save a lot of unnecessary effort.

In the table, I gradually explored the fields and attributes that could be added. Starting from simple adjectives, I moved on to texture, material, color, sheen, and prompt starters (like the word “Masterpiece”). Through experimentation, I mainly discovered the following techniques and conclusions:

1. Currently, models can understand the main subject and brief modifiers well, but overly long texts don’t significantly enhance detail at this stage. So, you should focus more on clearly expressing the main subject and its most prominent features.

2. Color prompts generally work when the specified color covers a large area of the result. With more than two colors, it is hard to pin down each color’s exact location and design form through language alone. It’s more efficient to make such adjustments directly in professional 3D software.

3. A good starting prompt is crucial as it can bring unexpected improvements in texture. Therefore, remember and observe the prompts associated with high-quality outputs, and experiment with them repeatedly. I’ve summarized some effective prompts in Section 2.

4. Describing material is more important than describing light sources. Additionally, the model’s understanding of material reflectivity is quite precise and deserves more attention.

5. The model generates good details in the first phase, but there’s a chance of encountering the “multi-head problem” during the second Refine phase. However, this can still be easily resolved within the 3D workflow.
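The kind of batch exploration behind these conclusions can be sketched in a few lines of Python. This is only an illustration of generating prompt variants, not the script I actually used: the function name `prompt_grid` and the field values are assumptions chosen for the example, and the step that submits each prompt to Tripo AI is deliberately left out.

```python
from itertools import product


def prompt_grid(subject, fields):
    """Yield one prompt per combination of field values.

    `fields` maps a field name (adjective, material, sheen, ...) to the
    values to try. An empty string means "leave this field out".
    """
    names = list(fields)
    for combo in product(*(fields[name] for name in names)):
        parts = [subject] + [value for value in combo if value]
        yield ", ".join(parts)


# Hypothetical field values, for illustration only.
fields = {
    "adjective": ["Compact", "Rugged"],
    "material": ["Laser-cut steel", "Composite fiber paneling"],
    "sheen": ["high reflectivity", "non-reflective"],
}

prompts = list(prompt_grid("Sci-fi bench", fields))
print(len(prompts))  # 2 * 2 * 2 = 8 variants
print(prompts[0])    # Sci-fi bench, Compact, Laser-cut steel, high reflectivity
```

Changing one field at a time while the others stay fixed makes it easier to see which attribute is responsible for a difference in the output.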

Let’s combine these points with some examples to deepen our understanding:

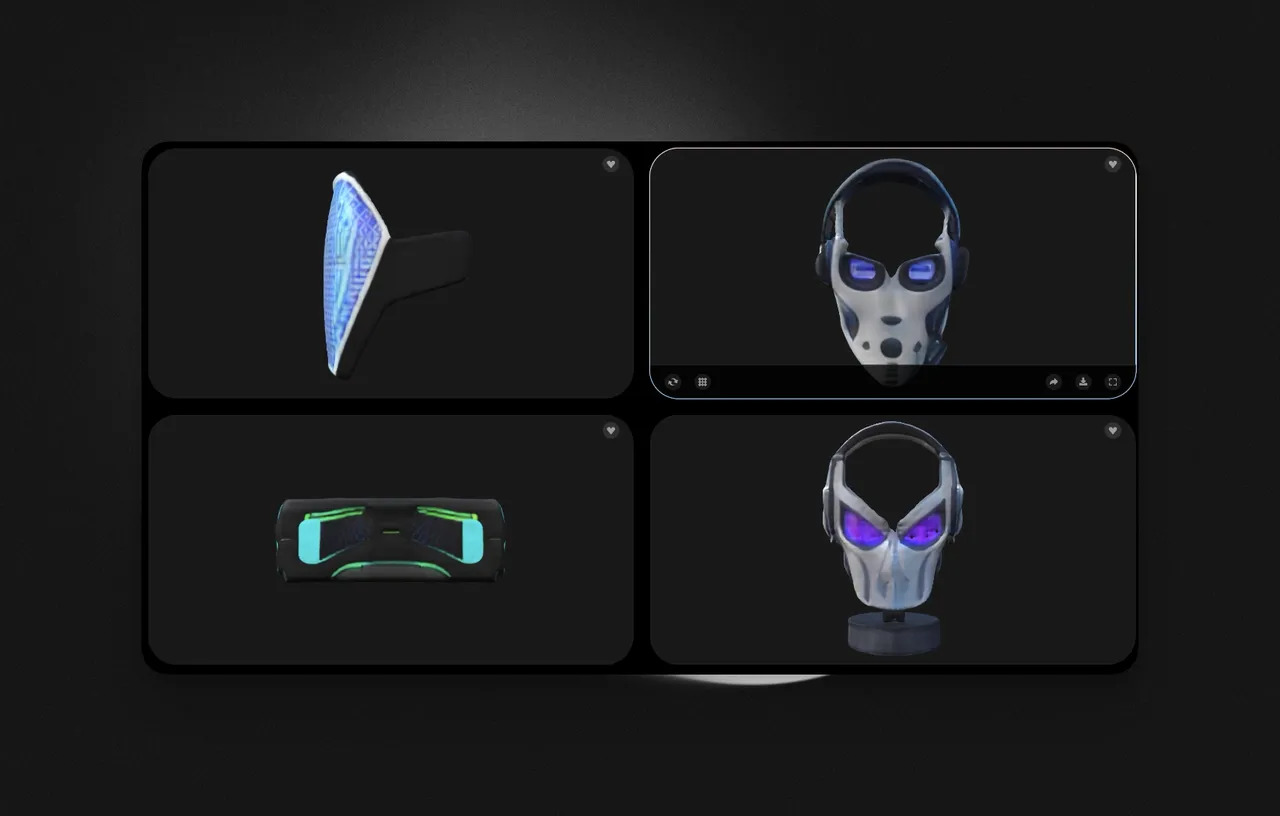

Prompt: Cyberpunk mask, Compact, digital, Futuristic design, Voice modulator, Air filtration system, Quick–release mechanism, Concealed weapon storage, Biometric locking, Textured solar panel, moderate brightness, functional reflectivity, Sophisticated models, Smooth LOD transitions, gradient detail levels

In the prompt above, aside from some of the more abstract design elements, the model demonstrates a good understanding of the rest of the prompt, especially in P4. However, does this mean that longer prompts are more worthwhile? A closer look reveals that only the main subject (mask), the most prominent descriptive modifiers (cyberpunk, futuristic), and the starting phrases (Smooth LOD transitions, gradient detail levels) carry significant weight. Let’s continue by comparing some related examples from the community:

In this example, the prompt is just a single sentence, but because it fully incorporates the “main subject + 1–3 most prominent adjectives + starting phrase” formula I mentioned, it creates an impression of high precision and a silky-smooth surface.
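The “main subject + 1–3 most prominent adjectives + starting phrase” formula can be written down as a small helper. This is a minimal sketch: the name `build_prompt` and the comma-separated ordering (subject first, as in the prompts shown in this guide) are my own assumptions, not a format Tripo AI requires.

```python
def build_prompt(subject, adjectives, starters):
    """Assemble a prompt as: subject, 1-3 adjectives, starting phrases."""
    if not 1 <= len(adjectives) <= 3:
        raise ValueError("use 1 to 3 prominent adjectives")
    if not starters:
        raise ValueError("add at least one starting phrase")
    return ", ".join([subject, *adjectives, *starters])


prompt = build_prompt(
    "mask",
    ["cyberpunk", "futuristic"],
    ["Smooth LOD transitions", "gradient detail levels"],
)
print(prompt)
# mask, cyberpunk, futuristic, Smooth LOD transitions, gradient detail levels
```

The length check is there only to keep the prompt short, in line with the observation that extra modifiers add little detail at this stage.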

Now, let’s look at another example:

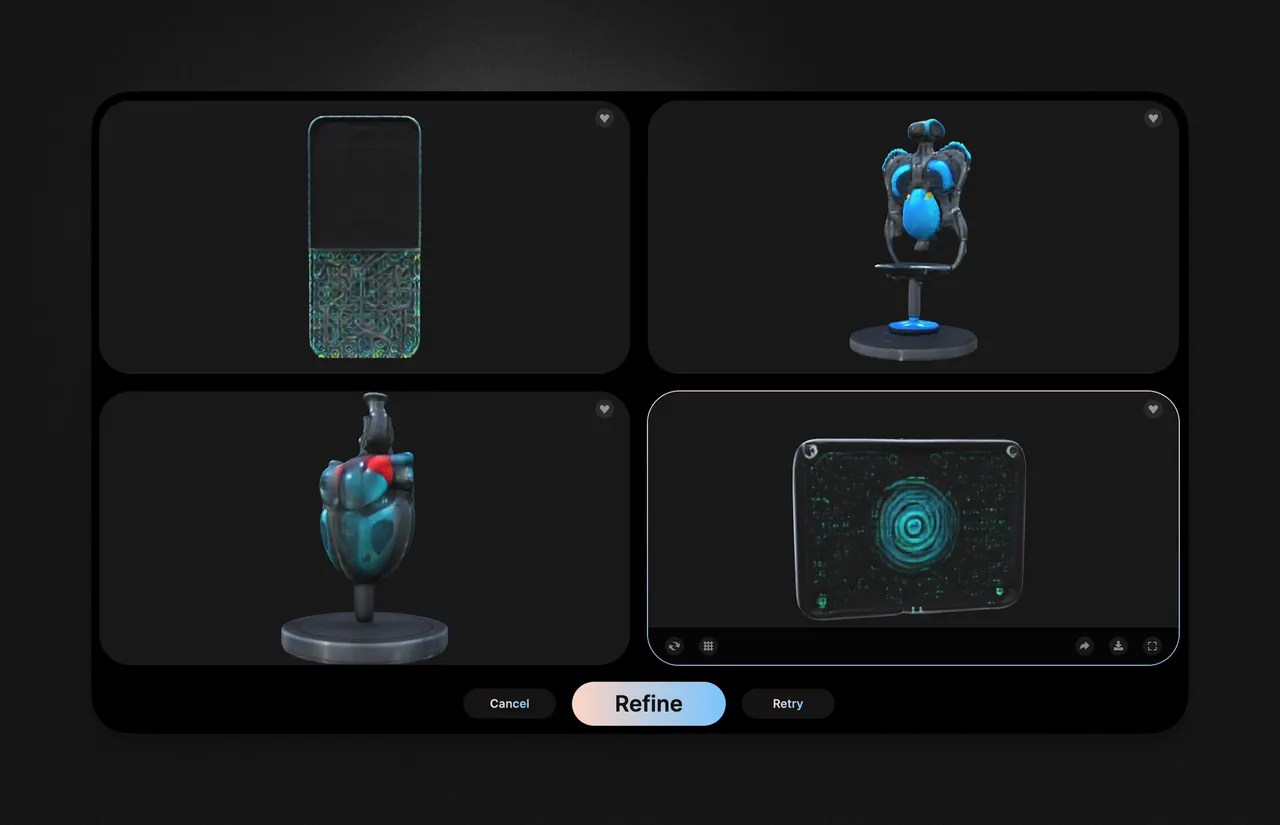

Prompt: Cybernetic heart, display, Lifesaving, mechanical, High–definition screen, Laser–cut steel, Modular seat configuration, Anti–graffiti coating, Shimmering sequin texture, bright appearance, sparkling reflectivity, Realistic fluid dynamics simulation, Precision surface smoothing, artifact–free curvature

In this example, P3’s cyberpunk electronic heart and P4’s futuristic display screen align well with the intent of the prompt. Looking at the structure of these long prompts, notice that we haven’t tried to describe the object with many detailed adjectives. Apart from the main subject, most of the text falls under the category of starting phrases, similar to words like “masterpiece” or “4k.”

However, in 3D, we need to remember some new prompts to achieve better results. For instance: Shimmering sequin texture, bright appearance, sparkling reflectivity, Realistic fluid dynamics simulation, Precision surface smoothing, artifact-free curvature. You might have noticed that the starting phrases include a lot of descriptions about material, reflective effects, and curvature. So, you can also think of starting phrases as these ‘3D characteristics’ that can significantly influence AI output.

On closer inspection, you’ll notice that this prompt has two parallel subjects: a Cybernetic heart and a display. For Stable Diffusion, such a prompt might result in something blurry or in both elements appearing in one image, potentially leading to logical issues in the image.

But in my experiments with Tripo AI, I found that the model tends to focus on drawing one object. Therefore, if your prompt includes two objects, you might find that Image 1 is entirely Object A, while Image 2 is entirely Object B.

This gives us an insight into the current stage of AI product development, suggesting a connection to the 3D workflow: focus on generating one item at a time.
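In practice, “one item at a time” means turning a prompt with several subjects into several prompts that share the same modifiers. Here is a minimal sketch of that idea; the helper name `one_prompt_per_subject` is hypothetical, and the subjects and modifiers are taken from the example prompt above.

```python
def one_prompt_per_subject(subjects, shared_modifiers):
    """Return one prompt per subject, each with the same modifiers."""
    return [", ".join([subject, *shared_modifiers]) for subject in subjects]


prompts = one_prompt_per_subject(
    ["Cybernetic heart", "display"],
    ["mechanical", "Precision surface smoothing", "artifact-free curvature"],
)
for p in prompts:
    print(p)
# Cybernetic heart, mechanical, Precision surface smoothing, artifact-free curvature
# display, mechanical, Precision surface smoothing, artifact-free curvature
```

Each resulting prompt can then be generated separately, and the objects can be combined later in 3D software.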

Let’s continue with two cases about materials:

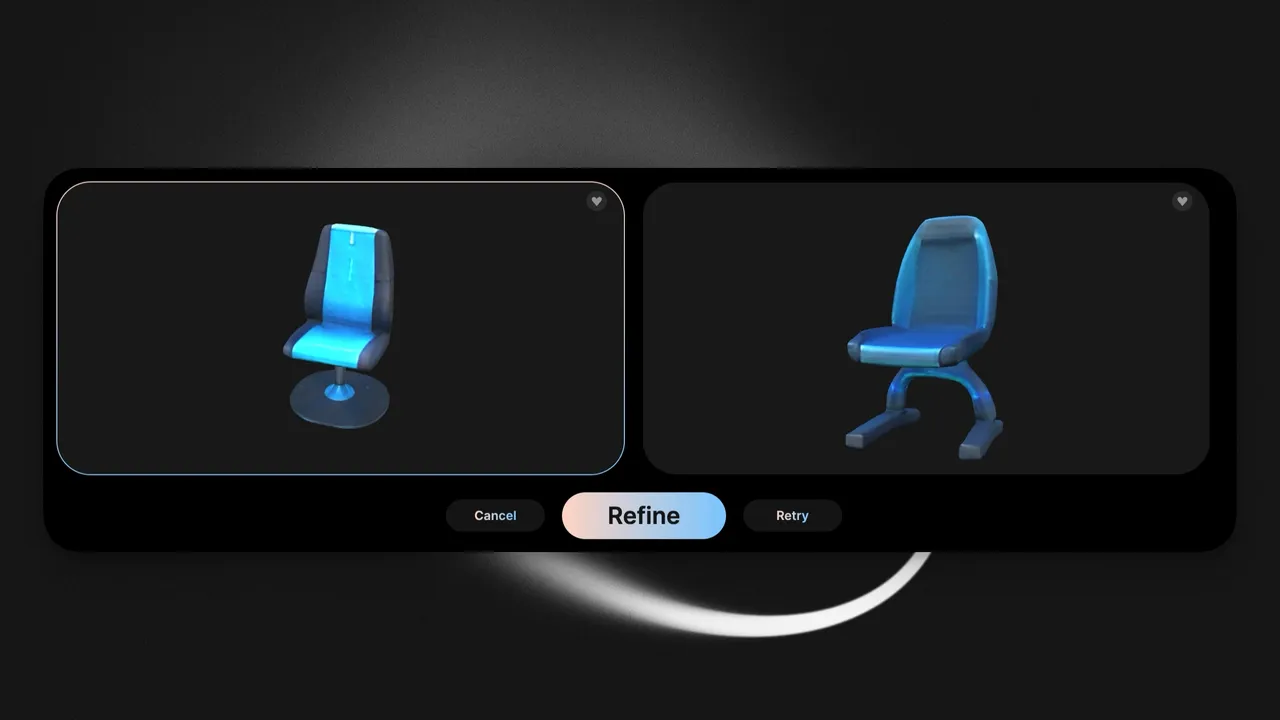

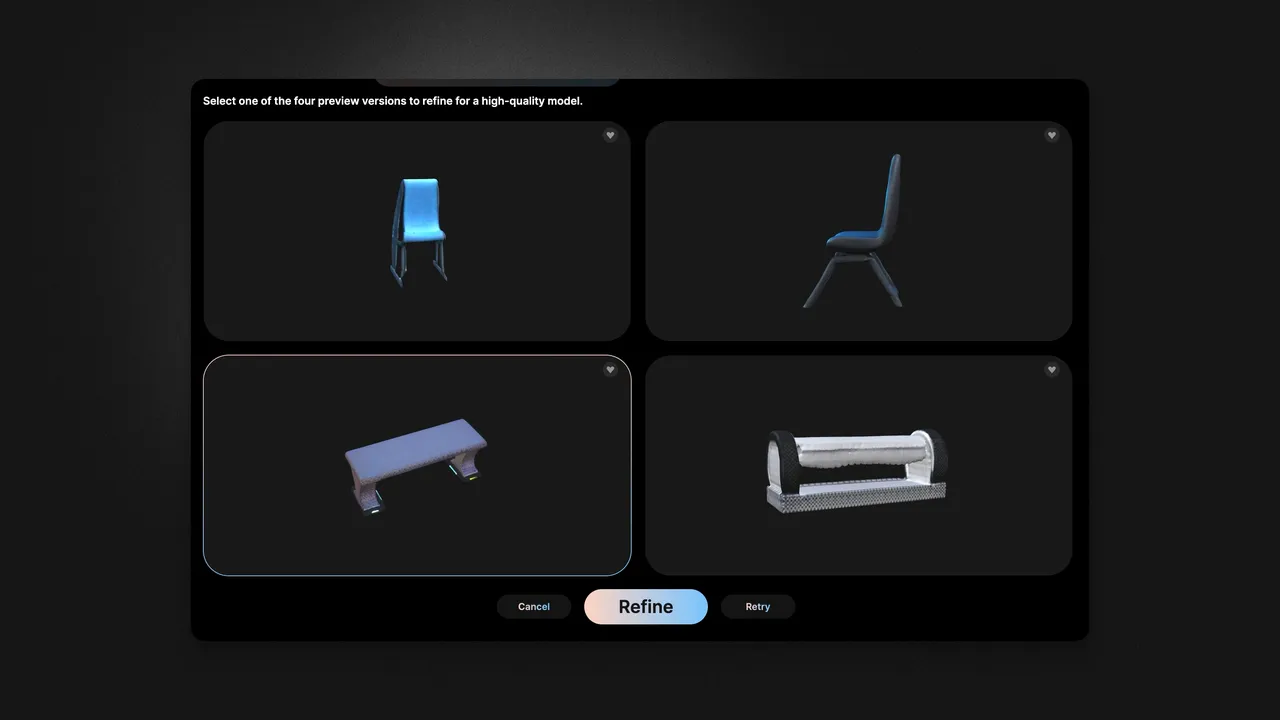

Prompt 1: Sci-fi bench, Durable, rugged, Flush installation, Anti-slip surface, Illuminated edges, Slick oil surface texture, variable brightness, high reflectivity, Seamless 3D integration, Harmonious light mapping, balanced illumination

Prompt 2: Sci-fi bench, Miniaturized, interactive, Flush installation, Anti-slip surface, Illuminated edges, Boosted motors, Grip tape detailing, Customizable wheels, Abrasive sandpaper texture, low brightness, non-reflective, Procedural generation techniques, Seamless mesh, unified surfaces

Particularly noteworthy is the comparison between the chair in the first image and the chairs in P2 and P3 of the second image, focusing on the material characteristics. The descriptions of reflective properties have a significant impact on the generated results, which has been consistently effective in multiple trials. Due to space limitations, I won’t display all examples here.
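One way to run this kind of material comparison yourself is to keep the subject and shared features fixed and swap only the material and reflectivity terms. The sketch below reuses terms from Prompt 1 and Prompt 2; the names `BASE`, `MATERIALS`, and `material_variants`, and the labels “glossy” and “matte”, are my own for illustration.

```python
# Terms shared by both bench prompts above.
BASE = ["Sci-fi bench", "Flush installation", "Anti-slip surface", "Illuminated edges"]

# Material and reflectivity terms taken from Prompt 1 and Prompt 2.
MATERIALS = {
    "glossy": ["Slick oil surface texture", "variable brightness", "high reflectivity"],
    "matte": ["Abrasive sandpaper texture", "low brightness", "non-reflective"],
}


def material_variants(base, materials):
    """Return {label: prompt}, varying only the material terms."""
    return {
        label: ", ".join([*base, *terms])
        for label, terms in materials.items()
    }


variants = material_variants(BASE, MATERIALS)
for label, prompt in variants.items():
    print(f"{label}: {prompt}")
```

Because everything except the material block is identical, any difference between the two results can be attributed to the reflectivity description with more confidence.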

Moving on, if you’re familiar with 3D modeling, you know the importance of ‘symmetry’ in the model creation process. Therefore, if needed, don’t forget to remind the AI specifically to focus on ‘symmetry.’

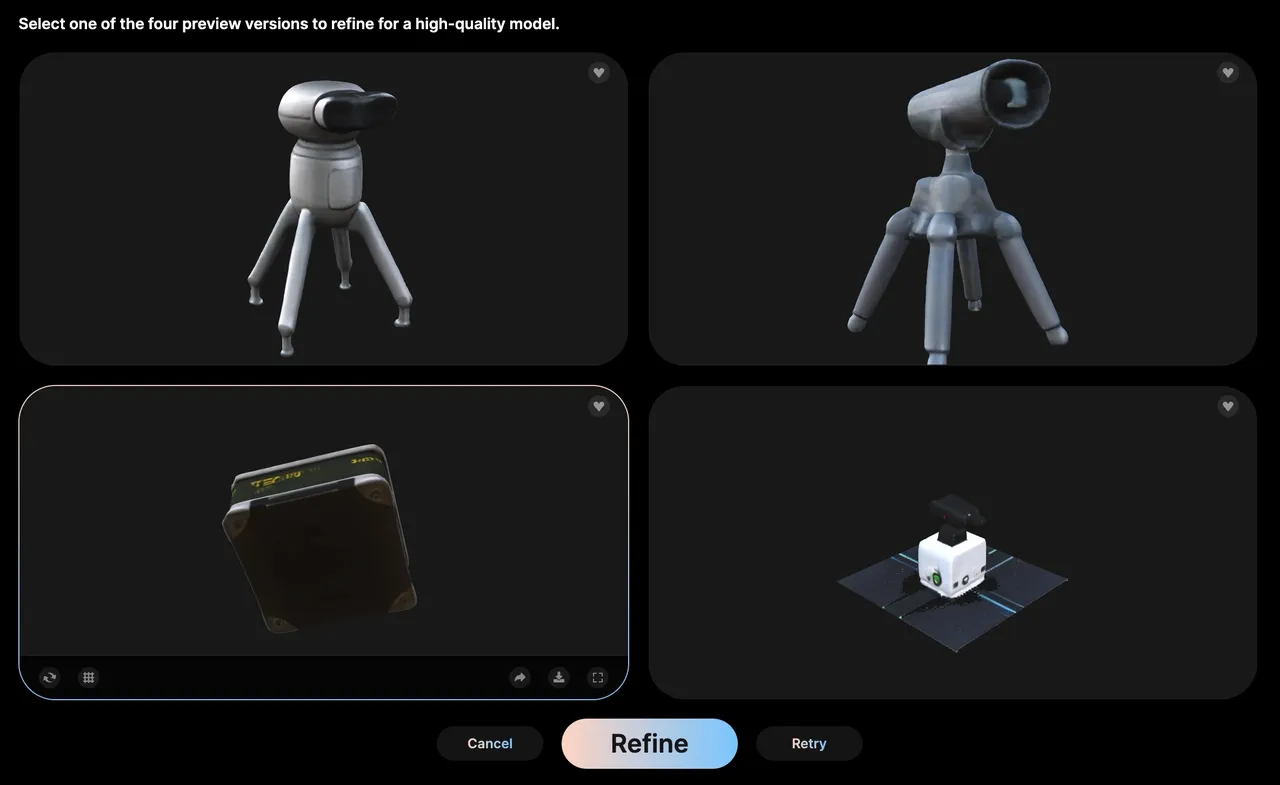

Prompt: Security turret, Tactical, time-telling, 360-degree surveillance, Automated targeting, Infrared vision, Augmented vision, Prescription compatibility, Lightweight frame, Composite fiber paneling, moderate brightness, reduced reflectivity, Immersive world-building, Intentional reflective design, deliberate symmetry
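If you generate prompts in bulk, the symmetry reminder is easy to forget. A small helper can append it only when it is missing. This is a sketch under my own assumptions: the function name `with_symmetry` is hypothetical, and the default phrase “deliberate symmetry” is simply the one used in the prompt above.

```python
def with_symmetry(prompt, phrase="deliberate symmetry"):
    """Append a symmetry phrase unless the prompt already mentions symmetry."""
    terms = [term.strip() for term in prompt.split(",") if term.strip()]
    if not any("symmetr" in term.lower() for term in terms):
        terms.append(phrase)
    return ", ".join(terms)


print(with_symmetry("Security turret, Tactical, Lightweight frame"))
# Security turret, Tactical, Lightweight frame, deliberate symmetry

print(with_symmetry("Security turret, deliberate symmetry"))
# Security turret, deliberate symmetry
```

The check matches “symmetr” so that both “symmetry” and “symmetrical” count as already present.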

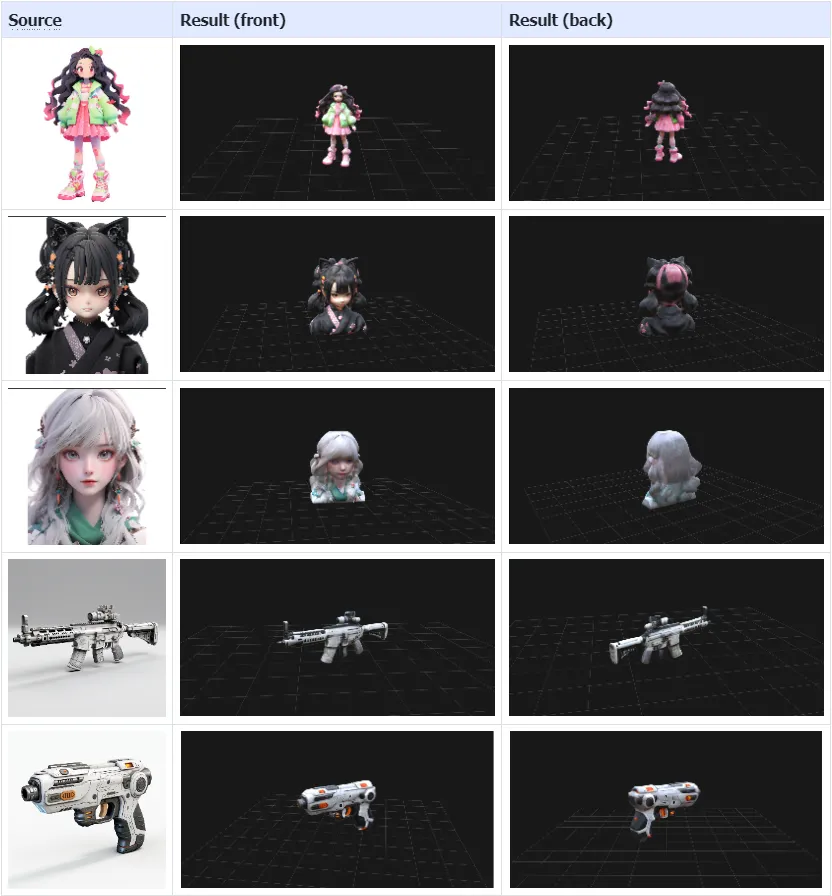

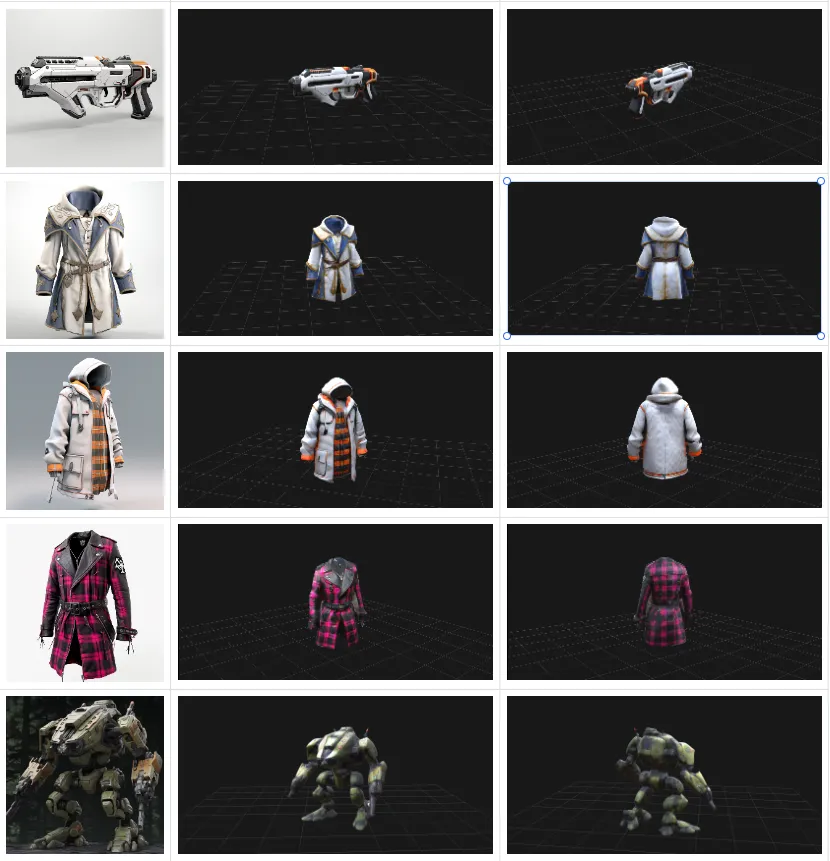

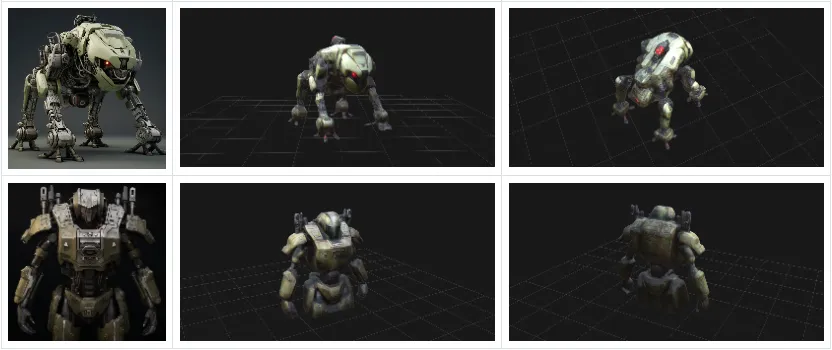

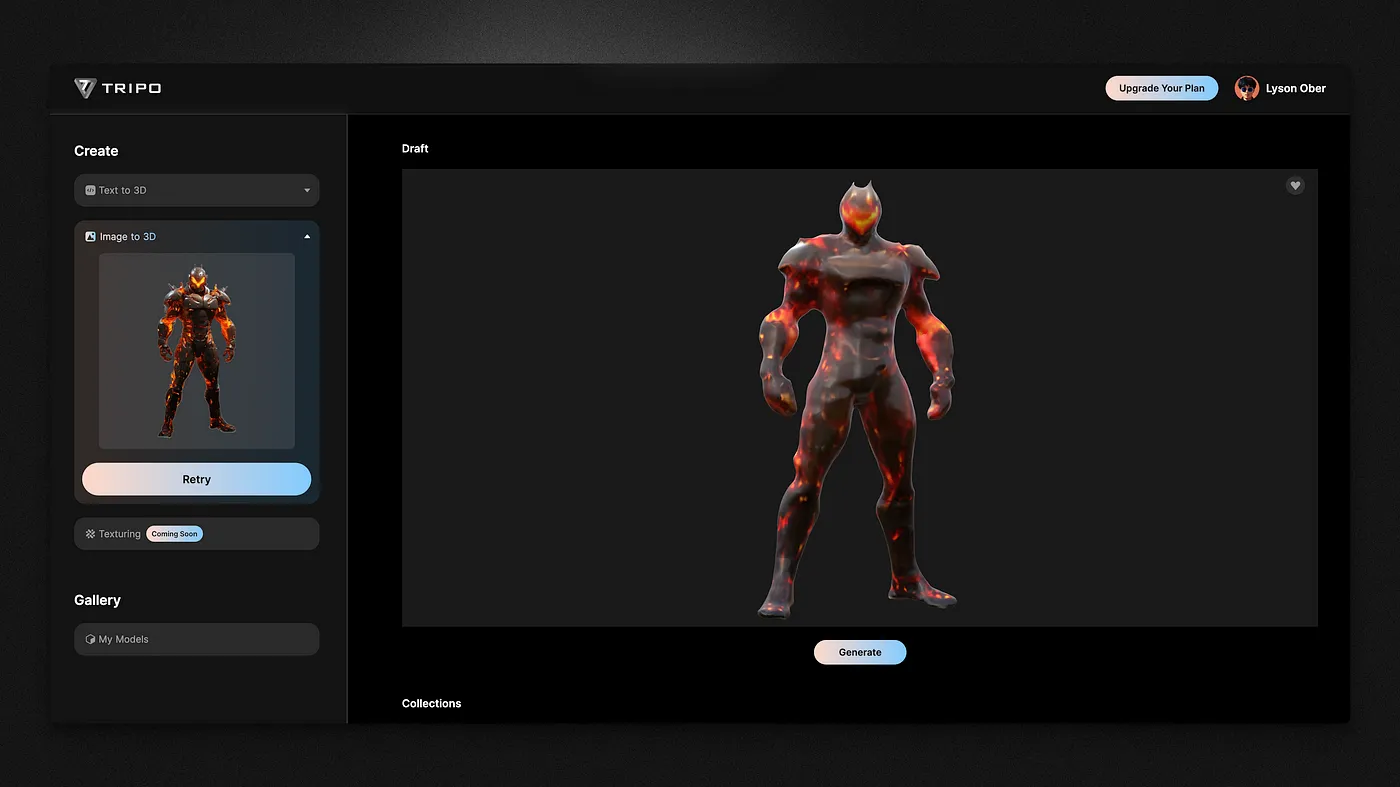

Of course, you can also use the Image to 3D feature, like with this image. When using Tripo AI, select ‘Image to 3D,’ upload your image, and simply click the Draft button. The system will first automatically extract the subject from the image, and then generate the model. Personally, I prefer pre-editing the image (extracting the foreground) in Photoshop to ensure precision in the initial draft, which can sometimes appear blurry when automatically segmented.

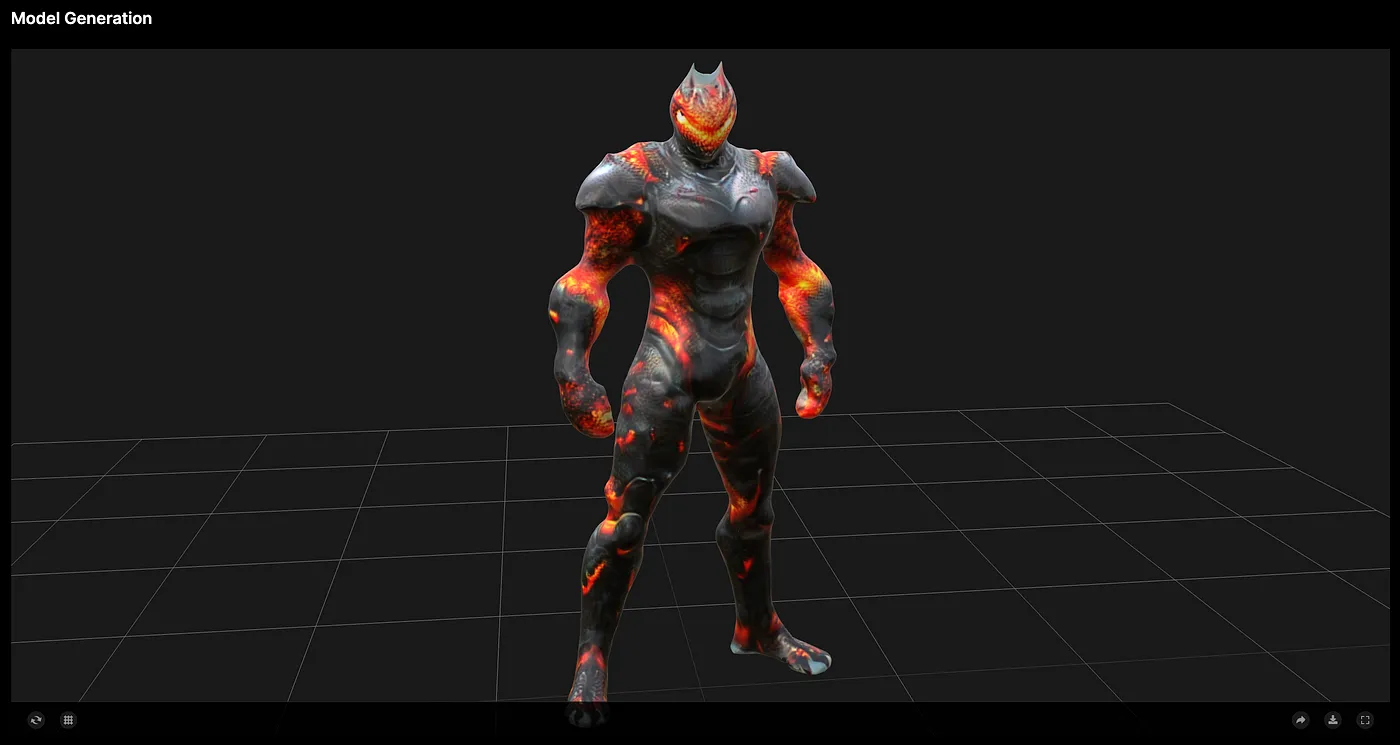

After that, we click on Refine to enhance the model’s precision. The final model obtained is as follows. By clicking download, you can import it into professional 3D software for further refinement:

Here are other Image-to-3D examples 👇: